How to adapt traditional usability principles for the unpredictable world of large language models and AI assistants.

For over two decades, I’ve guided product teams through the intricate process of user experience design and testing. The foundational principles of usability, such as Jakob Nielsen’s ten heuristics, have served as a constant guide. These principles remind us that good design should keep users informed, match their mental models, prevent errors, and offer control. However, the advent of generative AI (tools like Microsoft Copilot, Google Gemini, and bespoke digital assistants) has introduced a shift that challenges our traditional research playbook.

A typical procedure for testing any new product or service involves designing a prototype and conducting user research to determine if the concept is viable and if the usability is satisfactory. While this process works well with a static prototype, it is not easily applicable to generative AI tools. The core of the challenge lies in one critical difference: predictability.

The Unpredictability Problem: Why Traditional UX Methods Fall Short

A traditional product or feature typically has an ideal user journey or flow—a path the user would take to accomplish a task. The product team’s focus is to make this journey intuitive. That journey is usually the simplest way for the user to accomplish their goal, and well-designed interactions influence most users to take the quickest route.

Generative AI shatters this model.

Its output cannot be easily predicted, mapped, and prototyped. Each interaction is a distinct combination of the user’s input and the tool’s output, contingent on the quality and breadth of the underlying data. Generative AI is also probabilistic: the same prompt can produce different results from one run to the next. The user journey depends on a complex interplay of factors:

Prompting Skill: A novice user may get poor results, while an expert can extract immense value.

Conversational Design: How the AI guides the conversation and handles ambiguity.

Data Accuracy & Recency: The quality of the information the AI draws upon.

This creates an added layer of complexity that touches on usability but is not usability as we know it. This interaction is difficult to explore using a static prototype, which typically assumes an ideal scenario. Users are unpredictable too: their behavior is shaped by past experiences, personality traits, and the UI elements presented to them.

So, how can we effectively test these tools? Based on my experience working with generative AI applications, a multi-faceted research approach is essential. Here are the methods that have proven effective.

Method 1: Heuristic Evaluation for AI Usability

The first line of defense against a poor user experience is a rigorous heuristic evaluation. This method is cost-effective and quick, allowing for rapid iteration before involving users.

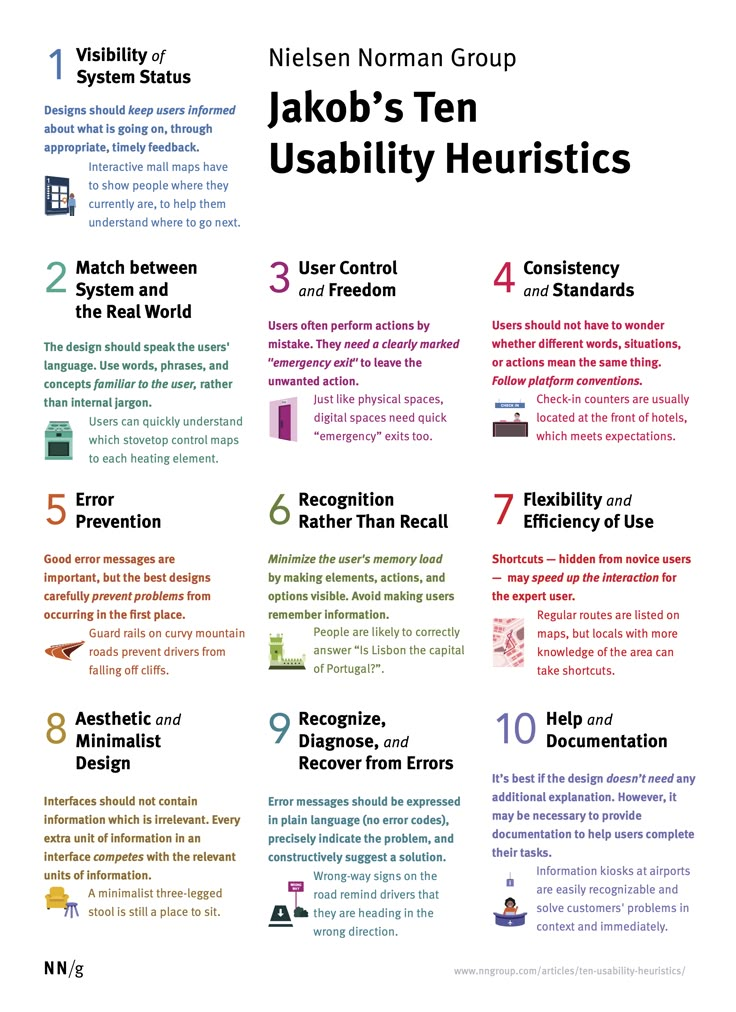

The Foundation: Revisiting the 10 Usability Heuristics

Jakob Nielsen’s heuristics are more relevant than ever, but they must be interpreted through an AI lens. Let’s break down how they apply:

Visibility of System Status: The AI must clearly communicate what it is doing. Is it “thinking,” generating, or retrieving information? A simple animation or message like “Searching knowledge base…” is crucial. Users should never be left wondering if the tool has frozen.

Match between System and the Real World: The AI should speak the user’s language, not technical jargon. It should understand domain-specific terms and respond in a natural, conversational tone. For instance, an AI for lawyers should understand legal terminology without requiring overly formal prompts.

User Control and Freedom: Users need an “emergency exit.” This could be a prominent “Stop generating” button, a “New chat” option, or the ability to easily edit their previous prompt without starting over. The undo function is as critical here as in a word processor.

Consistency and Standards: The AI’s behavior and voice should be consistent. If it uses a friendly, informal tone in one response, it shouldn’t switch to a robotic, formal tone in the next. It should also adhere to platform conventions (e.g., where settings are located).

Error Prevention: The best design prevents errors. For AI, this means designing the interface to guide users toward better prompting. This could include placeholder text with prompt examples, buttons for common follow-up questions, or real-time suggestions as the user types.

Recognition Rather Than Recall: Minimize the user’s memory load. Instead of making users remember what they already asked, the interface should show the conversation history clearly. Offer suggested next steps or allow users to easily reference earlier parts of the conversation.

Flexibility and Efficiency of Use: Cater to both novices and experts. Novices might need guided prompts, while experts will appreciate keyboard shortcuts, the ability to save and reuse effective prompts, or advanced settings to fine-tune the output.

Aesthetic and Minimalist Design: The chat interface should be clean and uncluttered. Irrelevant information competes with the AI’s responses. The focus should be on the conversation.

Help Users Recognize, Diagnose, and Recover from Errors: Errors are inevitable. Instead of a cryptic “404” or “I don’t understand,” the AI should explain the problem in plain language and suggest a constructive next step. For example: “I couldn’t find a specific policy on that topic. You might try rephrasing your question or searching the HR portal for ‘vacation time’.”

Help and Documentation: While the goal is for the tool to be self-explanatory, some documentation is usually needed. This should be easy to find and context-sensitive. An “i” icon next to the prompt box that explains prompting basics is far more effective than a separate 50-page manual.

How to Conduct an AI Heuristic Evaluation

Tools Needed:

Spreadsheet software (Google Sheets, Airtable, or Excel)

Screen capture tool (e.g., Loom, or simply screenshots)

The generative AI tool itself (staging environment preferred)

Inputs:

A list of the 10 heuristics.

A set of predefined test prompts and scenarios that cover a wide range of use cases, including edge cases.

Process:

Create a Testing Script: Go beyond happy-path scenarios. Deliberately ask about things you know are not in the database to test error handling. Try ambiguous prompts to see how the AI seeks clarification.

Systematic Review: For each heuristic, walk through your test prompts. Does the design comply with the principle? Note specific violations.

Evaluate Affordances: Look for shortcuts (e.g., suggested follow-up prompts). Is there enough information to guide the user? Is the user kept informed during generation?

Assess Consistency: Evaluate the outputs for consistency in tone, format, and adherence to any style guides or industry standards.
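The consistency check in the last step can be made slightly less subjective by running one prompt several times and comparing the outputs. The following is a minimal sketch, assuming you have already collected the responses as plain strings. The function name `pairwise_similarity` and the sample responses are hypothetical, and character-level similarity is only a rough proxy for consistency of tone and format, not a measure of whether the answers agree in meaning.

```python
from difflib import SequenceMatcher
from itertools import combinations
from statistics import mean


def pairwise_similarity(responses: list[str]) -> float:
    """Average text similarity (0.0 to 1.0) across every pair of responses."""
    if len(responses) < 2:
        raise ValueError("need at least two responses to compare")
    ratios = [
        SequenceMatcher(None, a, b).ratio()
        for a, b in combinations(responses, 2)
    ]
    return mean(ratios)


# Hypothetical outputs collected by sending the same prompt three times.
runs = [
    "Employees receive 16 weeks of paid parental leave.",
    "Employees receive 16 weeks of paid parental leave.",
    "You get sixteen weeks of parental leave, fully paid.",
]
score = pairwise_similarity(runs)
print(f"consistency: {score:.2f}")
```

A low score does not prove a problem, but it flags prompts whose outputs deserve a closer manual read.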

Expected Outputs:

A prioritized findings report using a severity scale (e.g., 0-4 or Critical/High/Medium/Low).

A color-coded grid (see example below) that maps heuristic violations to specific parts of the interface, providing a visual overview for the team.

Annotated screenshots for each finding to provide clear, actionable context.

Example Prioritization Grid:

| Heuristic Violated | Location / Example | Severity (H/M/L) | Recommendation |
|---|---|---|---|
| #1 Visibility of Status | No indicator during long generation (>3 sec) | M | Add a pulsating “AI is thinking” animation. |
| #5 Error Prevention | No prompt suggestions for new users | H | Add example prompts as placeholder text. |
| #9 Error Recovery | Error message: “Input not recognized.” | H | Change to: “I’m not sure I understand. Could you try rephrasing your question?” |
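If the findings live in a spreadsheet export, ordering them by severity is easy to script. Here is a minimal sketch using the three rows from the grid above. The `Finding` class, the `SEVERITY_RANK` mapping, and the `prioritize` function are illustrative names of my own, not part of any established framework.

```python
from dataclasses import dataclass

# Higher number = more urgent. The H/M/L scale mirrors the example grid.
SEVERITY_RANK = {"H": 3, "M": 2, "L": 1}


@dataclass
class Finding:
    heuristic: str
    location: str
    severity: str  # "H", "M", or "L"
    recommendation: str


def prioritize(findings: list[Finding]) -> list[Finding]:
    """Sort from most to least severe; ties keep their original order."""
    return sorted(findings, key=lambda f: SEVERITY_RANK[f.severity], reverse=True)


findings = [
    Finding("#1 Visibility of Status", "No indicator during long generation",
            "M", "Add a thinking animation."),
    Finding("#5 Error Prevention", "No prompt suggestions for new users",
            "H", "Add example prompts as placeholder text."),
    Finding("#9 Error Recovery", "Error message: Input not recognized.",
            "H", "Ask the user to rephrase in plain language."),
]

ranked = prioritize(findings)
for f in ranked:
    print(f.severity, f.heuristic, "->", f.recommendation)
```

Because the sort is stable, findings with equal severity stay in the order the evaluator logged them.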

Recommended Framework:

Nielsen Norman Group’s Severity Rating: A well-established framework for prioritizing usability problems. (Reference: https://www.nngroup.com/articles/how-to-rate-the-severity-of-usability-problems/)

Method 2: Systematic Accuracy and Output Testing

A usable interface is of little value if the information it provides is incorrect. Accuracy testing is a non-negotiable, parallel track to usability research. This can and should be conducted internally before any user-facing testing.

How to Test for Accuracy

Tools Needed:

Spreadsheet for test cases and results tracking.

Access to the AI’s underlying knowledge base or data sources.

A “source of truth” (e.g., official documentation, validated data sets).

Inputs:

A comprehensive list of knowledge domains the AI is expected to handle. Go broad to uncover gaps or outdated information.

A set of “ideal prompts” designed to retrieve specific pieces of information from each domain.

Process:

Audit the Knowledge Base: Work with subject matter experts to identify and remove or archive old, invalid, or contradictory documents. Garbage in, garbage out is the fundamental rule of AI.

Define Test Parameters: For each test prompt, track:

Accuracy: Is the information factually correct?

Completeness: Did the response cover all key aspects, or did it require extra prompting?

Source Citation: Are sources provided? Are the cited sources accurate and relevant?

Handling of Unknowns: What happens when the information is not available? Does it admit it doesn’t know, or does it “hallucinate” an answer?

Brevity vs. Detail: Is the summary useful, or is critical information missing?

Execute and Document: Run the prompts systematically. Log the outputs against your parameters.
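The parameters above map naturally onto one record per test prompt. The sketch below shows one way to log results and roll them up by knowledge domain, which feeds the gap analysis. All names (`AccuracyResult`, `accuracy_by_domain`, `to_csv`) and the sample prompts are hypothetical, and the true/false judgments are assumed to come from a human reviewer, not from the code.

```python
import csv
import io
from collections import defaultdict
from dataclasses import asdict, dataclass, fields
from typing import Optional


@dataclass
class AccuracyResult:
    domain: str
    prompt: str
    accurate: bool        # factually correct per the source of truth
    complete: bool        # covered key aspects without extra prompting
    sources_valid: bool   # citations present, accurate, and relevant
    detail_ok: bool       # summary kept the critical information
    handled_unknown: Optional[bool] = None  # None when the answer exists


def accuracy_by_domain(results: list[AccuracyResult]) -> dict[str, float]:
    """Share of accurate responses per knowledge domain."""
    totals, correct = defaultdict(int), defaultdict(int)
    for r in results:
        totals[r.domain] += 1
        correct[r.domain] += r.accurate  # True counts as 1
    return {d: correct[d] / totals[d] for d in totals}


def to_csv(results: list[AccuracyResult]) -> str:
    """Serialize results so they can be opened in a spreadsheet."""
    buf = io.StringIO()
    names = [f.name for f in fields(AccuracyResult)]
    writer = csv.DictWriter(buf, fieldnames=names)
    writer.writeheader()
    writer.writerows(asdict(r) for r in results)
    return buf.getvalue()


# Hypothetical reviewer judgments for three test prompts.
results = [
    AccuracyResult("HR policies", "How many vacation days do new hires get?",
                   True, True, True, True),
    AccuracyResult("HR policies", "What is the parental leave policy?",
                   False, False, True, True),
    AccuracyResult("IT support", "How do I reset my VPN password?",
                   True, True, False, True),
]
print(accuracy_by_domain(results))
```

Domains with a low share of accurate answers are the first candidates for a knowledge base review with subject matter experts.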

Expected Outputs:

A detailed accuracy audit report highlighting specific areas where the AI provides incorrect, incomplete, or misleading information.

A gap analysis of the knowledge base, identifying topics that need more or better source material.

Specific examples of hallucinations or poor summarization that need to be addressed through model tuning or prompt engineering.

Best Practices:

Involve Subject Matter Experts (SMEs): They are essential for validating the accuracy of outputs.

Test at Scale: Automate regression testing for accuracy where possible to ensure new data additions don’t break existing functionality.

Google’s PAIR Guidebook: The “People + AI Research” guidebook offers excellent frameworks for evaluating AI models, including fairness and accuracy. (Reference: https://pair.withgoogle.com/)
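The “Test at Scale” practice can start very small. The sketch below reruns a fixed set of prompts and reports any answer that no longer contains a phrase it is expected to include. Everything here is an assumption for illustration: `fake_assistant` stands in for a call to your own staging environment, and the prompts and required phrases are placeholders. Substring matching is a crude check, so it complements expert review and does not replace it.

```python
from typing import Callable

# Each case pairs a prompt with phrases the answer must contain.
# Prompts and phrases are hypothetical placeholders.
REGRESSION_CASES = [
    ("How many vacation days do new hires get?", ["15 days"]),
    ("What is the office dress code on Mars?", ["don't have information"]),
    ("Who approves expense reports?", ["direct manager"]),
]


def run_regression(ask: Callable[[str], str],
                   cases: list[tuple[str, list[str]]]) -> list[str]:
    """Return the prompts whose answers are missing a required phrase."""
    failures = []
    for prompt, required in cases:
        answer = ask(prompt).lower()
        if not all(phrase.lower() in answer for phrase in required):
            failures.append(prompt)
    return failures


def fake_assistant(prompt: str) -> str:
    """Stand-in for the real tool; swap in a call to your staging build."""
    canned = {
        "How many vacation days do new hires get?":
            "New hires accrue 15 days of vacation per year.",
        "Who approves expense reports?":
            "Expense reports are approved by the finance team.",
    }
    return canned.get(prompt, "I don't have information on that.")


failed = run_regression(fake_assistant, REGRESSION_CASES)
print("failing prompts:", failed)
```

Note that the second case checks the “handling of unknowns” behavior: the expected answer is an admission that the information is missing.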

Method 3: Usability Studies to Uncover Mental Models and Expectations

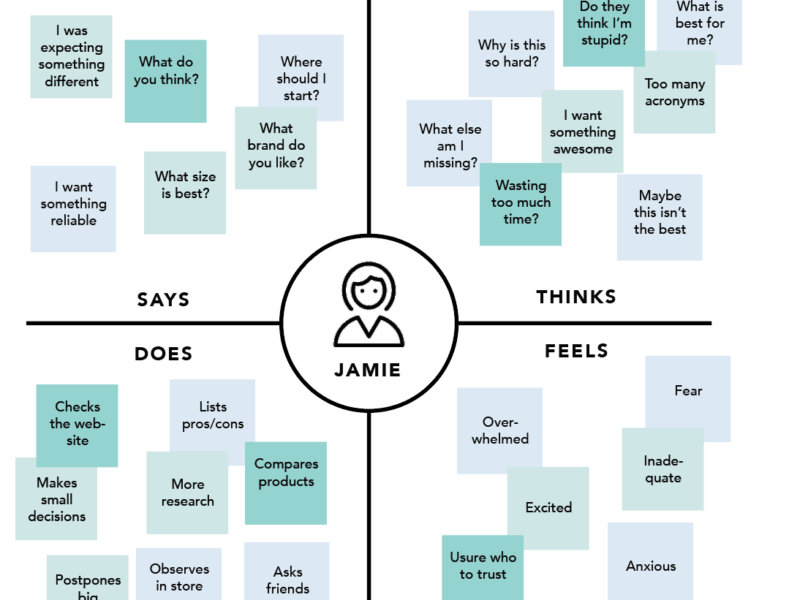

This is where we bring in the users. The goal here is not to test the AI’s knowledge, but to understand how users expect to interact with it and how their mental models influence their success.

The Mental Model Clash: Search vs. Conversation

For decades, users have been trained to search using keywords. The mental model for search is “I enter a few words, and I get a list of links to explore.” Generative AI requires a more conversational mental model: “I ask a full-sentence question, and I get a direct, summarized answer.”

This disconnect is a major barrier to adoption. A usability study is designed to expose this gap.

How to Conduct a Generative AI Usability Study

Tools Needed:

User recruiting platform (e.g., UserInterviews.com)

Video conferencing software with screen-sharing (e.g., Zoom)

Session recording and note-taking software (e.g., Dovetail, EnjoyHQ)

Inputs:

A carefully recruited set of participants matching your target personas.

A research protocol that includes both task-based scenarios and free exploration.

Process:

Hybrid Protocol:

Task-Based Section: “Please find information about the company’s parental leave policy.” This allows you to compare performance across participants.

Free Exploration Section: “Now, please use the tool to find out anything you’d typically need to know for your job.” This reveals natural behavior and unprompted expectations.

Observe and Probe:

Pay close attention to the first prompt. It reveals their initial mental model.

Ask “why”: “Why did you phrase it that way? What kind of answer were you expecting?”

After a response, ask: “Did this give you all the information you were hoping for?” This is a direct success metric.

Observe refinement behavior: When the output is poor, what do they do? Do they add more keywords? Do they try a more conversational question? Do they give up?

Focus on Expectations: The key insight is not just whether they completed the task, but whether the AI’s behavior matched their expectations of a helpful assistant.
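When reviewing session logs afterward, a rough first pass can tag each participant’s opening prompt as keyword-style or conversational before the qualitative analysis begins. This is a minimal sketch built on surface cues only. The `prompt_style` function and the `CONVERSATIONAL_OPENERS` word list are my own illustrative assumptions, and the labels should be treated as a starting point for discussion, not as a validated classification.

```python
# Words that commonly open a full-sentence question or request (assumed list).
CONVERSATIONAL_OPENERS = {
    "what", "how", "why", "when", "where", "who", "which",
    "can", "could", "does", "do", "is", "are", "should",
    "please", "tell", "explain",
}


def prompt_style(prompt: str) -> str:
    """Label a prompt 'conversational', 'keyword', or 'empty'."""
    words = prompt.lower().strip().split()
    if not words:
        return "empty"
    opens_like_sentence = words[0] in CONVERSATIONAL_OPENERS
    ends_with_question_mark = prompt.strip().endswith("?")
    if opens_like_sentence or ends_with_question_mark:
        return "conversational"
    return "keyword"


# Hypothetical first prompts from two participants.
for first_prompt in ["parental leave policy",
                     "How much parental leave do I get?"]:
    print(f"{first_prompt!r}: {prompt_style(first_prompt)}")
```

Comparing the label for a participant’s first prompt with the label for their later prompts gives a simple view of whether their mental model shifted during the session.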

Expected Outputs:

Rich, qualitative data on user mental models, frustrations, and moments of delight.

Archetypes of users based on their prompting style and expectations (e.g., The Searcher, The Conversationalist, The Skeptic).

A list of common misconceptions and gaps in user understanding that need to be addressed through onboarding, tooltips, or interface design (e.g., “Users don’t know they can ask follow-up questions conversationally”).

Actionable recommendations for designing the onboarding experience and in-the-moment help features.

Recommended Framework:

Cognitive Walkthrough: Adapted for AI, this method focuses on evaluating the learnability of a system for new users. It forces the researcher to ask: Will the user know what to do to achieve their goal? (Reference: https://www.interaction-design.org/literature/article/cognitive-walkthrough)

Why This Triangulated Approach Matters

By combining these three methods—Heuristic Evaluation, Accuracy Testing, and Mental Model-focused Usability Studies—product teams can build a holistic picture of their generative AI tool’s strengths and weaknesses.

This approach allows you to:

Find and fix critical usability issues early, preventing user frustration and abandonment.

Optimize for accuracy and reliability, building user trust—the most valuable currency for any AI application.

Bridge the gap between user expectations and AI capabilities through targeted education and thoughtful interface design, dramatically reducing the barrier to adoption.

Conclusion

Testing generative AI requires us to expand our UX research toolkit. We can no longer rely solely on prototyping predictable flows. Instead, we must adopt a multi-pronged strategy that assesses not just the interface’s usability but also the quality of its output and its alignment with deeply ingrained user mental models. By applying adapted heuristic evaluations, rigorous accuracy checks, and insightful usability studies, we can guide the development of generative AI tools that are not only powerful but also intuitive, trustworthy, and ultimately, adopted. The principles of good UX, as defined by pioneers like Nielsen and Norman, remain our foundation, but our methods must evolve to meet the unique challenges of this new technology.

References and Further Reading:

Nielsen Norman Group: 10 Usability Heuristics for User Interface Design

Nielsen Norman Group: How to Rate the Severity of Usability Problems

Google PAIR (People + AI Research) Guidebook

Interaction Design Foundation: Cognitive Walkthrough

Microsoft: Guidelines for Human-AI Interaction

Apple: Human Interface Guidelines for Machine Learning